If efficiency programs were telephones, the evaluation community would still be using wall-mounted analog dial-ups rather than the iPhone. Yes, I’m going to tell you why programs are designed to be evaluated and not to be effective, part 2, herein.

The following is the list of flaws in demand-side management theory, as presented last week.

- Efficiency must cost more than inefficiency

- Building energy codes are sacrosanct

- Efficiency has to be the primary factor in customer decision making

- Customers must “get their money back”

- The unfamiliar get fifty cents on the dollar

- Immortality is fantasy

Last week we covered the first two items. This week I explain the other four flaws. Many of these flaws are intertwined, as I shall explain.

Efficiency as the Primary Factor

Programs are designed to be “evaluable” so they can receive a good grade at the end of the exam (evaluation). First off, this adds a ton of cost to the delivery, as I explained in the recent pay for performance rants one, two, and three.

Last week I wrote that fossilized policy and norms require that efficiency costs more than inefficiency. This decrepit logic is rooted in the widget era. E.g., we will install brand new bright lighting, and the beneficent utility will chip in to make the simple payback two years sooner for you. Want some? The evaluator pins the attribution[1] tail on the donkey to establish whether the decision was influenced or controlled by the herring, aka the incentive.

To sell efficiency in the modern era, many times we must sell by stealth. For example, tell me about your business. What are your pain points and problems? Oh, tell me more about that bottleneck.

See where this is headed? We sell efficiency by selling solutions to problems. The customer may not give a rip about the energy saved. That is a coproduct. The Jurassic evaluator might gash such a smashing success with a 20% attribution rate when there is no way it would have happened without the assistance of the program.

Customers Must Get Their Money Back

A customer complains about their efficiency surcharge or rate increase. They are advised to participate in the program to “get their money back.” The program influences the customer to do the paperwork, but not to do anything they would not have done anyway. I’d bet my bank account this project would get an attribution score similar to the ones described in the next section.

Moreover, another fossilized paradigm is that the population of customers must be the beneficiaries of customer contributions to the tune of about 80%. Why? Here is a secret. Most businesses, as described in the prior section, care little about the herring, or the energy impacts.

Consider new construction programs. Last week I wrote that current old-school programs are 80% ineffective (taking credit for the sunrise) because they reward things the trades do everywhere. I am here to tell you: the trades are the barriers and the gatekeepers of progress. To move the needle, a lot more incentives need to go to design and construction firms, and NOT the customer. This should all happen behind the scenes, out of the customer’s view. In other words, it works like an invisible upstream program. Yes. That is what it is.

Fifty Cents on the Dollar

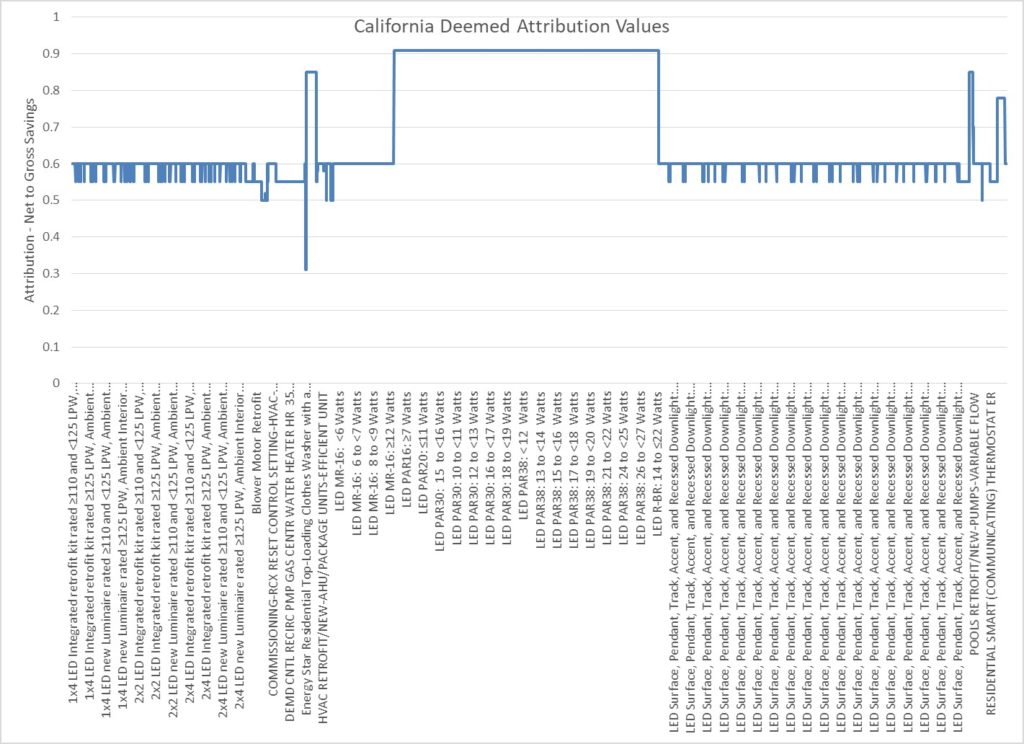

The chart above shows deemed attribution rates for 6,605 measures in a California portfolio. You’re welcome.

Conclusion: only the bizarre (minus 80°F freezers) and the obscure and expensive (certain lightbulbs) are rewarded with high attribution rates. Everything else is 55% or 60%.

Can I ask a question?

What is the attribution of a kind neighbor frantically communicating to me to stop backing my car out of the garage so I don’t run over my computer? It’s happened before, but not to me. Trust me.

In such a case, I’m doing my thing, going to work. From my perspective, I am clueless as to what is about to happen. The intervenor can see exactly what is going to happen. How is my stopping as a result of the intervention not 100% attributable to my neighbor?

How, then, is retro-commissioning (as one example) not almost 100% attributable to the program, rather than the pathetic 60% in the chart? The customer doesn’t know he’s running over computers every day. The crunching noises are a little annoying, but he doesn’t know what they are. Only the intervenor can see what is happening, and this stuff would never stop if not for the intervenor and program. Period.
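To put rough numbers on the retro-commissioning example, here is a minimal sketch using the definition of attribution in footnote [1]: credited savings equal gross savings times the attribution rate. The function name `credited_savings` and the 500,000 kWh project size are hypothetical, chosen only for illustration. The 60% rate is the deemed value cited above, and 100% is the upper bound the neighbor analogy argues for.

```python
def credited_savings(gross_kwh: float, attribution: float) -> float:
    """Savings awarded to the program: gross savings times the attribution rate."""
    if not 0.0 <= attribution <= 1.0:
        raise ValueError("attribution must be between 0 and 1")
    return gross_kwh * attribution


# Hypothetical retro-commissioning project; the size is illustrative only.
gross_kwh = 500_000.0

deemed = credited_savings(gross_kwh, 0.60)  # deemed rate cited in the post
argued = credited_savings(gross_kwh, 1.00)  # upper bound argued for in the post
uncredited = argued - deemed                # savings the program delivers but is not awarded

print(f"deemed: {deemed:,.0f} kWh")
print(f"argued: {argued:,.0f} kWh")
print(f"uncredited: {uncredited:,.0f} kWh")
```

Under these assumed numbers, 40% of the savings the program made happen never show up in its evaluated results.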

Immortality is Fantasy

Finally, we must purge the 40-year-old bullheaded notion that immortality is fantasy. Forty-year-old technologies, by the millions, are wasting energy in thousands of buildings. This equipment and its owners are left behind, stranded, because incentivizing customers to replace such relics is deemed free ridership. Jurassic attribution dogma strikes again.

Program implementers skip these projects because the dogma does not reward replacing terrible with good[2]. It only rewards good to great. But see the 1/x principle I wrote about 5.5 years ago. While we approach the wall of diminishing returns on incremental efficiency now and into the future, we ignore the vast seas of opportunity in stranded savings of cast iron technologies.
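A rough sketch of why terrible-to-good beats good-to-great, assuming the 1/x principle means that energy input scales as the inverse of efficiency (input = useful output / efficiency). That reading is my assumption, as are the function name `energy_input` and the three efficiency values, which are hypothetical round numbers and not data from any program.

```python
def energy_input(useful_output: float, efficiency: float) -> float:
    """Energy that must be purchased to deliver a given useful output."""
    if efficiency <= 0:
        raise ValueError("efficiency must be positive")
    return useful_output / efficiency


useful_output = 100.0  # arbitrary units of delivered heating, cooling, light, etc.

# Hypothetical efficiencies, for illustration only.
terrible, good, great = 0.50, 0.80, 0.95

saved_terrible_to_good = energy_input(useful_output, terrible) - energy_input(useful_output, good)
saved_good_to_great = energy_input(useful_output, good) - energy_input(useful_output, great)

print(f"terrible -> good saves {saved_terrible_to_good:.1f} units")
print(f"good -> great saves {saved_good_to_great:.1f} units")
```

With these assumed values, replacing the relic saves several times more energy than the incremental upgrade the dogma rewards, which is the diminishing-returns wall in miniature.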

Conclusion

Entrenched program evaluation routines and policies become more irrelevant every year, to the point that they stifle impacts more than they improve them. As with any other change, we need to step back and ask ourselves what we are doing, after ditching ALL doctrines of yesteryear.

[1] Attribution is the percentage of savings awarded to the program for its role in making the efficiency measure happen.

[2] Good = up to codes and standards of efficiency.