Every day brings a headline about explosive data center growth and the associated electric loads that threaten to rapidly deplete reserve capacity on the electric grid. The Energy Rant has featured several posts describing the magnitude of the issue and the concern among regulators, utilities, and government officials, most recently from the Mid-America Regulatory Conference and Syncing Power Generation with Soaring Loads.

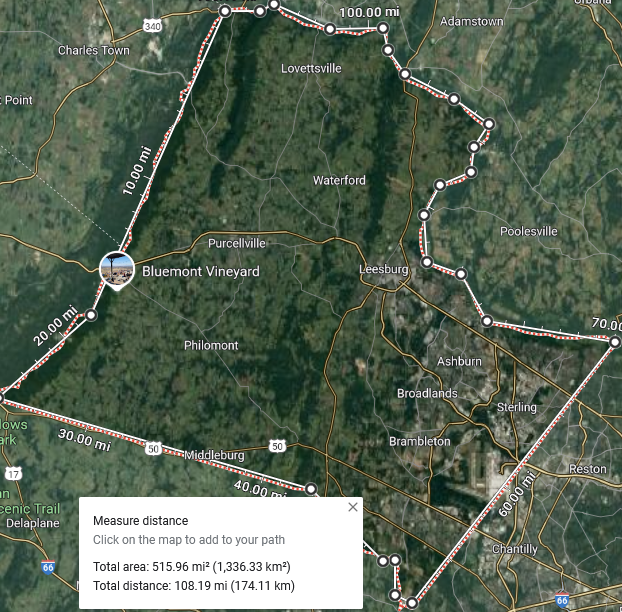

Because load management and reliable, affordable electricity are near and dear to me, and because Michaels has been successfully engaging with developers of monster data centers, I pounced on the opportunity to attend the inaugural Data Center Frontier conference last week. It was held in Reston, Virginia, the heart of the world’s largest concentration of data centers.

Disclaimer

Caution: Jeff tripled his knowledge of modern data centers in three days last week. The information in this post was gleaned from statements at the conference and corroborated with information from the WWW. Computers, servers, and software are not his glowing cerebral strength.

Data Center Loads: Fourth Power

Data center loads are growing by the fourth power. First, construction is growing exponentially, and computing power and density are increasing exponentially within each facility. The computing power of server racks is not growing in ten-percent increments. It is exploding exponentially, as shown in the following chart, which I recreated from a blurry photo of a slide from the conference. It features data from chip maker Nvidia, the third-largest company in the U.S. by market capitalization, depending on the day. It is among the top four, along with Alphabet (Google), Microsoft, and Apple – larger than peon Amazon. On one bad day last week, Nvidia lost more stock value than the total market values of PepsiCo and Chevron – just for scale.

Figure 1 Chip Computing Power Progression

Racks

Rack densities in terms of computing power, energy consumption, and weight are also exploding. A rack is what you might expect: 750 mm wide, 1,200 mm deep, and at least 48 U high. A U, or rack unit, is 1.75 inches. The cabinet is, therefore, about 29.5 inches wide, 47 inches deep, and seven feet tall. Readers can visualize that. It’s not unlike a large refrigerator.
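For anyone who wants to check the conversion, here is a minimal Python sketch. It uses only the dimensions quoted above plus the exact definition of 25.4 mm per inch; the function name is my own, purely for illustration.

```python
# Convert the rack dimensions quoted above from millimeters and rack units to inches.
MM_PER_INCH = 25.4    # exact, by definition
INCHES_PER_U = 1.75   # one rack unit (U)


def rack_dimensions_in(width_mm: float, depth_mm: float, height_u: int) -> tuple:
    """Return (width, depth, height) in inches for a rack given in mm and U."""
    return width_mm / MM_PER_INCH, depth_mm / MM_PER_INCH, height_u * INCHES_PER_U


width_in, depth_in, height_in = rack_dimensions_in(750, 1200, 48)
print(f"{width_in:.1f} in wide, {depth_in:.1f} in deep, "
      f"{height_in:.0f} in ({height_in / 12:.0f} ft) tall")
```

That works out to about 29.5 inches wide, 47.2 inches deep, and 84 inches (7 feet) tall.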

Would you like to guess the power density in that refrigerator-size rack? Well, do it anyway. An internet search reveals 100-600 watts per blade server and perhaps a total of 5-10 kW per rack, as shown in Figure 2 below, courtesy of Delta Power Systems. Right now, racks are in the 70 kW range, just above what air cooling can provide. The industry has its sights set on 250 kW, and even 1 MW per rack, within the next decade.

Figure 2 Historic Rack Power Trend
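To put "exploding" in numbers, the sketch below computes the compound annual growth rate implied by going from today's roughly 70 kW racks to 250 kW or 1 MW. Treating "the next decade" as exactly ten years is my assumption, and `implied_cagr` is just an illustrative helper.

```python
# Compound annual growth rate (CAGR) implied by the rack-power targets in the text.
# Assumption: "the next decade" is treated as exactly 10 years.


def implied_cagr(start_kw: float, end_kw: float, years: float) -> float:
    """Annual growth rate needed to go from start_kw to end_kw in `years` years."""
    return (end_kw / start_kw) ** (1 / years) - 1


TODAY_KW = 70.0
for target_kw in (250.0, 1000.0):
    rate = implied_cagr(TODAY_KW, target_kw, years=10)
    print(f"{TODAY_KW:.0f} kW -> {target_kw:,.0f} kW in 10 years: {rate:.0%} per year")
```

Under that assumption, the 250 kW target implies about 14% growth per year, and the 1 MW target about 30% per year, compounded for a decade.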

The 20,000 TFLOP point in Figure 1 above represents the modern Blackwell configuration (Figure 3) of 18 racks and 1.2 megawatts of power on a 560-square-foot footprint, for a mind-blowing power density of over 2 kW per square foot. That’s 200 MW per 100,000 square feet. Each rack weighs around 4,000 pounds. You read that right.

Figure 3 Blackwell Pod Design
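Here is the arithmetic behind those density figures as a quick Python sketch, using only the pod numbers quoted above (18 racks, 1.2 MW, 560 square feet).

```python
# Power density of the Blackwell pod described above.
POD_POWER_KW = 1_200    # 1.2 MW
POD_AREA_SQFT = 560
POD_RACKS = 18

kw_per_sqft = POD_POWER_KW / POD_AREA_SQFT
kw_per_rack = POD_POWER_KW / POD_RACKS
mw_per_100k_sqft = kw_per_sqft * 100_000 / 1_000  # scale up, then convert kW to MW

print(f"{kw_per_sqft:.2f} kW/sq ft, {kw_per_rack:.1f} kW per rack, "
      f"{mw_per_100k_sqft:.0f} MW per 100,000 sq ft")
```

Rounding the density down to 2 kW per square foot gives the 200 MW figure; the unrounded value is closer to 214 MW. The roughly 67 kW per rack also lines up with the 70 kW range mentioned earlier.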

The next-generation configuration is called the Rubin. At 40,000 TFLOP, the Rubin design is expected to weigh four to six tons per rack. That’s weight and not cooling load. Considering these servers’ weight and power density, raised floors become unworkable.
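To get a feel for the floor problem, the sketch below spreads each rack's weight evenly over the 750 mm by 1,200 mm footprint given earlier. Two assumptions: "tons" means US short tons of 2,000 pounds, and the load is uniform. Real racks sit on casters or feet, which concentrates the load even more.

```python
# Approximate floor loading (lb/sq ft) for one rack, with its weight spread evenly
# over a 750 mm x 1,200 mm footprint.
# Assumptions: "tons" = US short tons (2,000 lb); uniform load (ignores casters/feet).
MM_PER_INCH = 25.4
SQ_IN_PER_SQ_FT = 144
LB_PER_SHORT_TON = 2_000

FOOTPRINT_SQFT = (750 / MM_PER_INCH) * (1200 / MM_PER_INCH) / SQ_IN_PER_SQ_FT


def floor_load_psf(weight_lb: float) -> float:
    """Pounds per square foot if the rack's weight is spread over its footprint."""
    return weight_lb / FOOTPRINT_SQFT


racks = [
    ("Blackwell, ~4,000 lb", 4_000),
    ("Rubin, 4 tons", 4 * LB_PER_SHORT_TON),
    ("Rubin, 6 tons", 6 * LB_PER_SHORT_TON),
]
for label, weight_lb in racks:
    print(f"{label}: {floor_load_psf(weight_lb):,.0f} lb/sq ft")
```

The footprint is just under 9.7 square feet, so the loads come out to roughly 413, 826, and 1,239 pounds per square foot.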

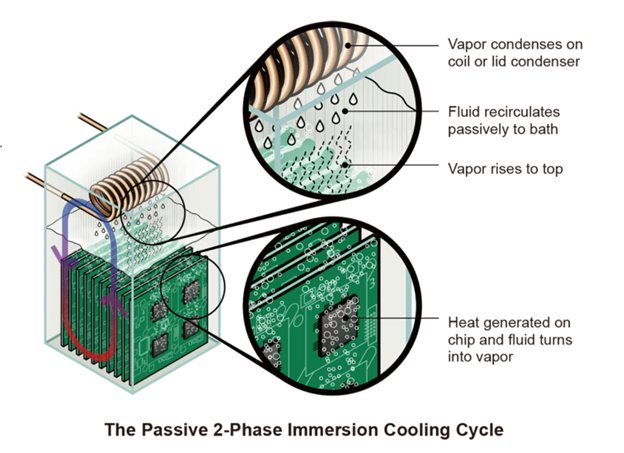

Chip Cooling

Server cooling is moving from single-phase immersion cooling to two-phase immersion cooling, a brilliant cooling solution. The cooling fluid for the two-phase method is a refrigerant in direct contact with the hot chips, not to be confused with the band Hot Chip. Because the fluid absorbs heat by boiling rather than just warming up, two-phase cooling provides many multiples of the cooling capacity of single-phase cooling, and the fluid stays stubbornly and reliably at its boiling point of about 50 degrees C, or 122 degrees F. Figure 4 provides a nice cartoon, and here is a link to a video of it in action.

Figure 4 Two-Phase Immersion Cooling Cycle
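Why does boiling beat simply warming the fluid? The sketch below compares the heat one kilogram of coolant absorbs in each case. The fluid properties are illustrative placeholders I chose for the example, not data for any specific dielectric fluid, so treat the ratio as a rough feel rather than a spec.

```python
# Heat absorbed per kilogram of coolant: warming a liquid (single phase) versus
# boiling it (two phase). All property values are HYPOTHETICAL placeholders.
CP_KJ_PER_KG_K = 1.1     # hypothetical liquid specific heat, kJ/(kg*K)
H_FG_KJ_PER_KG = 100.0   # hypothetical latent heat of vaporization, kJ/kg
DELTA_T_K = 10.0         # hypothetical temperature rise allowed in single phase


def sensible_heat_kj_per_kg(cp: float, delta_t: float) -> float:
    """Heat a liquid absorbs while warming by delta_t without boiling."""
    return cp * delta_t


def c_to_f(celsius: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit."""
    return celsius * 9 / 5 + 32


single_phase = sensible_heat_kj_per_kg(CP_KJ_PER_KG_K, DELTA_T_K)
two_phase = H_FG_KJ_PER_KG  # boiling absorbs heat at constant temperature
print(f"single phase: {single_phase:.0f} kJ/kg, two phase: {two_phase:.0f} kJ/kg, "
      f"ratio {two_phase / single_phase:.1f}x")
print(f"boiling point: 50 C = {c_to_f(50):.0f} F")
```

With these made-up numbers, boiling absorbs about nine times as much heat per kilogram, and because it happens at the boiling point, the fluid temperature holds steady instead of climbing with the load.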

AI 101

I found that this article, AI 101: Training v Inference, provides a straightforward explanation this simpleton can understand. It made me realize how incredibly efficient the neural networks in our brains are compared to these massive data centers. Our brains run on a measly 20 watts [1].

Figure 5 Brain Networks
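Footnote [1] does the brain math in round numbers. Here is the same calculation as a short Python sketch, assuming the standard 4,184 joules per kilocalorie.

```python
# Average power from daily food energy, and the brain's ~20% share (footnote [1]).
JOULES_PER_KCAL = 4_184          # thermochemical kilocalorie
SECONDS_PER_DAY = 24 * 60 * 60   # 86,400 s
BRAIN_SHARE = 0.20               # brain's share of the body's energy, per footnote [1]


def average_watts(kcal_per_day: float) -> float:
    """Convert a daily energy intake in kcal to average power in watts."""
    return kcal_per_day * JOULES_PER_KCAL / SECONDS_PER_DAY


body_w = average_watts(2_000)
brain_w = BRAIN_SHARE * body_w
print(f"body: ~{body_w:.0f} W, brain: ~{brain_w:.0f} W")
```

That gives about 97 W for the body and 19 W for the brain, which round to the 100 W and 20 W used in the text.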

That’s the micro level. I’ll close with the macro level and save a deeper dive into data center facilities for next week.

Mega Perspectives

According to a Dominion Energy spokesperson, the world’s largest concentration of data centers, in Northern Virginia, has a connected load of 3.3 gigawatts today. For scale, one large commercial nuclear reactor produces about one GW of electricity. Dominion has 50 GW of requested load additions from data centers on the drawing board. That’s 2.5 billion brains’ worth of power. Think about that.
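The scale comparisons in that paragraph are simple division. A quick sketch using the text's figures (20 W per brain, about 1 GW per large reactor, 3.3 GW today, 50 GW requested); it compares power draw only, not computing capability, and the multiple of today's load is my own derived number.

```python
# Scale comparisons for Dominion's requested data center load, using the text's figures.
WATTS_PER_GW = 1e9
BRAIN_W = 20        # watts per human brain, footnote [1]
REACTOR_GW = 1.0    # one large commercial nuclear reactor, approximately

existing_gw = 3.3
requested_gw = 50.0

brains = requested_gw * WATTS_PER_GW / BRAIN_W
reactors = requested_gw / REACTOR_GW
multiple_of_today = requested_gw / existing_gw

print(f"{brains:,.0f} brains' worth of power")
print(f"{reactors:.0f} large reactors")
print(f"{multiple_of_today:.1f}x today's connected load")
```

The requested additions alone are roughly 15 times the connected load the region has today.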

Fifty GW is easy to fathom, considering a single campus can top 1,000 MW, or 1 gigawatt, on less than 200 acres, or 0.3 square miles [2].
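The campus figures imply a power density worth spelling out. This sketch uses the text's 1,000 MW on 200 acres; because the campus is "less than 200 acres," the land numbers are upper bounds.

```python
# Land use implied by a 1,000 MW data center campus on about 200 acres.
ACRES_PER_SQ_MI = 640

campus_mw = 1_000
campus_acres = 200      # "less than 200 acres", so land figures here are upper bounds
target_mw = 50_000      # Dominion's 50 GW of requested load

campus_sq_mi = campus_acres / ACRES_PER_SQ_MI
mw_per_acre = campus_mw / campus_acres
campuses_needed = target_mw / campus_mw
total_sq_mi = campuses_needed * campus_sq_mi

print(f"one campus: {campus_sq_mi:.2f} sq mi, {mw_per_acre:.0f} MW per acre")
print(f"50 GW: {campuses_needed:.0f} campuses on about {total_sq_mi:.1f} sq mi")
```

At that density, 50 GW of data centers would fit on roughly 15 or 16 square miles, a useful contrast with the solar acreage that follows.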

I see a big problem. How in the world will they get 50 nuclear power plants built and wired to ~150 substations for these facilities?

Just for fun, 50 GW would require all of Loudoun County to be covered in solar panels [3], sparing Dulles Airport. Since solar has a capacity factor of only 0.23, Fairfax, Prince William, and Arlington Counties would also need to be blanketed by solar panels to power these data centers around the clock (unicorn batteries not included).

Figure 6 Five Hundred Square Miles of Loudoun County
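Here is the solar arithmetic as a Python sketch, using footnote [3]'s five acres per MW and the 0.23 capacity factor from the text. The around-the-clock case simply divides by the capacity factor to match average energy; it ignores storage losses and assumes those unicorn batteries exist.

```python
# Solar land needed to serve 50 GW of data center load.
# Assumptions: 5 acres per MW of nameplate (footnote [3]); capacity factor 0.23;
# round-the-clock supply modeled as matching average energy (lossless storage).
ACRES_PER_MW = 5
ACRES_PER_SQ_MI = 640
CAPACITY_FACTOR = 0.23


def solar_land_sq_mi(nameplate_mw: float) -> float:
    """Square miles of solar panels for a given nameplate capacity."""
    return nameplate_mw * ACRES_PER_MW / ACRES_PER_SQ_MI


load_mw = 50_000
nameplate_24x7_mw = load_mw / CAPACITY_FACTOR  # scale up so average output = load

print(f"50 GW nameplate: {solar_land_sq_mi(load_mw):,.0f} sq mi")
print(f"{nameplate_24x7_mw / 1_000:,.0f} GW nameplate for 24/7: "
      f"{solar_land_sq_mi(nameplate_24x7_mw):,.0f} sq mi")
```

That is roughly 390 square miles just to match the load at peak sun, and about 217 GW of panels on roughly 1,700 square miles to match it on average.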

Next Up

Barring something even more spectacular than data centers coming across my senses, the next two posts will feature:

- Data center facilities, efficiency, and programs

- How to meet the load growth of data centers – a reality check

[1] A 2,000 kcal/day diet averages about 100 W, and the brain uses about 20% of the body’s energy. https://www.brainfacts.org/brain-anatomy-and-function/anatomy/2019/how-much-energy-does-the-brain-use-020119?ref=noahgerman.com

[2] Source: confidential.

[3] Five acres per MW. https://seia.org/initiatives/land-use-solar-development/
