I couldn’t pass up the chance to take a break from data centers for a second. One morning last week, I read this from the American Energy Society: “California Governor Gavin Newsom is trying to get control of wild gasoline spikes.” That afternoon, I read a headline from Hart Energy: “California Sues Exxon Over Global Plastic Pollution.” It’s like beating a dog to motivate him to roll over.

Aircraft Carriers and Data Centers

I had the good fortune to tour a data center under construction a few weeks ago in Northern Virginia. It was a three-story, one-million-square-foot facility. The only way I can describe it is as something out of a sci-fi movie, or something on the size and scale of an aircraft carrier.

While interning at Newport News Shipbuilding, I was able to board the aircraft carrier USS Abraham Lincoln (CVN 72) for a self-guided tour just before it was christened and delivered to the Navy. In those pre-9/11 days, my intern buddy and I had full access to almost everything on the carrier. The hangars below deck and the power requirements of the carrier compare to the data halls and power requirements of the data center. Nimitz-class carriers like the Lincoln produce 260,000 hp, or 194 MW, of propulsion. The anticipated electric load for the data center we toured: 180 MW. Spike the football here.
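For anyone checking the horsepower figure, here is a minimal sketch of the conversion. It assumes mechanical horsepower at 745.7 W per hp; the function name is just for illustration.

```python
# Convert shaft horsepower to megawatts.
# Assumption: mechanical horsepower, 745.7 W per hp.
WATTS_PER_HP = 745.7


def hp_to_mw(hp: float) -> float:
    """Return the power in megawatts for a given horsepower."""
    return hp * WATTS_PER_HP / 1_000_000


carrier_mw = hp_to_mw(260_000)   # Nimitz-class propulsion from the text
data_center_mw = 180             # anticipated load of the toured facility

print(f"Carrier propulsion: {carrier_mw:.0f} MW")
print(f"Data center load as a share of carrier: {data_center_mw / carrier_mw:.0%}")
```

Under that assumption, the 260,000 hp rounds to the 194 MW quoted above.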

Figure 1 USS George H.W. Bush, CVN 77

Referring to last week, using an efficient power usage effectiveness (PUE) of 1.2, the cooling load of the facility we toured would be just over 50,000 tons. That’s pretty close, but assume cooling loads will grow over the facility’s life because, as described in the first part of this series, one-megawatt cabinets are already on the horizon. So, let’s assume 75,000 tons of cooling, or 900 million Btu/hr. For scale, the cooling capacity of this one data center is equal to that of:


- 30,000 homes[1]

- Half the capacity of Chicago’s district chilled water plant that serves 53 million square feet of office space downtown[2]

- Twice the capacity of Minneapolis’s district chilled water plant

- Thrice the capacity of Denver’s district chilled water plant
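The arithmetic behind these comparisons can be sketched as follows. The exact method behind the "just over 50,000 tons" figure is not shown, so this sketch assumes the full 180 MW ends up as heat to be removed. It also uses the footnoted rules of thumb (2.5 tons per home, 400 sf per ton). The variable names are illustrative only.

```python
# Rough cooling-load arithmetic for the toured data center.
# Assumptions (not the author's stated method): all 180 MW becomes heat,
# 1 ton of refrigeration = 12,000 Btu/hr = about 3.517 kW.
KW_PER_TON = 3.517
BTU_PER_HR_PER_TON = 12_000


def mw_to_tons(mw: float) -> float:
    """Convert a heat load in MW to tons of refrigeration."""
    return mw * 1_000 / KW_PER_TON


facility_tons = mw_to_tons(180)                    # "just over 50,000 tons"
design_tons = 75_000                               # allowance for growth
design_btu_hr = design_tons * BTU_PER_HR_PER_TON   # 900 million Btu/hr

homes = design_tons / 2.5                          # footnote [1]: 2.5 tons per home
chicago_tons = 53_000_000 / 400                    # footnote [2]: 400 sf per ton
share_of_chicago = design_tons / chicago_tons

print(f"Facility load: {facility_tons:,.0f} tons")
print(f"Design load:   {design_btu_hr:,} Btu/hr")
print(f"Homes:         {homes:,.0f}")
print(f"Share of Chicago plant estimate: {share_of_chicago:.0%}")
```

With those rules of thumb, the Chicago comparison lands a little above one half, which is close enough for a sense of scale.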

Now that I’ve established that we’re dealing with colossal cooling loads, let’s look at the wide range of cooling systems and the efficiency of serving these loads.

Direct Expansion

I won’t name any names, but when I see rooftop units on a data center website with no mention of efficiency features, I assume I am looking at lowball first cost and lowball energy efficiency. This is not uncommon, and it is an investment model that works for particular objectives.

Figure 2 Direct Expansion Rooftop Unit Cooling

Direct Expansion, Pumped Refrigerant Economizer


Some data centers feature direct expansion with a pumped refrigerant economizer. This technology is like a home’s central air conditioning unit, except that when it’s cold outside, a phase change is not required to move enough heat. Refrigeration compressors can be turned off, and less energy-intensive pumps can be used to transfer heat with liquid refrigerant. The temperature difference is enough to handle the required heat transfer using liquid refrigerant, not unlike a radiator that keeps a car engine cool. This is also known as a dry cooler.

Air-Cooled Chillers and Air-Side Economizers

Air-cooled chillers are generally less efficient than water-cooled chillers. This may be due to the markets and use cases (low first cost) to which they are applied. This Vantage Data Center in Northern Virginia features what appears to be floating head pressure for the refrigeration cycle (lower outdoor temperatures allow for lower condensing pressures and less compressor power with the appropriate controls) and an air-side economizer.

Water-Cooled Chillers

Water-cooled chillers provide the most efficient cooling, probably in the PUE range of 1.2, particularly with water-side economizing. Water-side economizing uses evaporation to reject far more heat when conditions are dry, as I described comparing Arizona heat to Miami heat (not the NBA team) last week.

Evaporative cooling works great when outdoor air temperatures are above freezing, but things can get a little tricky when making 40°F water while the outdoor temperature is below freezing. In those cases, dry coolers may be added, and since that is a closed-loop system, antifreeze is used in the working fluid to prevent freezing. Giant plate-and-frame heat exchangers transfer heat from the chilled water loop in the building to the glycol/antifreeze loop that rejects heat outdoors.

On that note, data centers can consume vast amounts of water. A massive data center planned by Google in Mesa, AZ, may use up to 4 million gallons daily. Per my calculations, that is about 116,000 tons of cooling, maybe enough for 1.5-2.0 million square feet of data center.
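The calculation behind that figure is not shown, but one set of assumptions that reproduces it is sketched below: every gallon evaporates, water weighs 8.34 lb per gallon, and evaporation carries away roughly 1,000 Btu per pound (a rounded latent heat; the real value varies with temperature). Blowdown and drift are ignored, and the function name is illustrative.

```python
# Estimate the heat rejection supported by evaporating a daily water volume.
# Assumptions: all water evaporates (no blowdown or drift), 8.34 lb/gal,
# and a rounded latent heat of 1,000 Btu/lb.
LB_PER_GALLON = 8.34
LATENT_HEAT_BTU_PER_LB = 1_000
BTU_PER_HR_PER_TON = 12_000


def gallons_per_day_to_tons(gallons_per_day: float) -> float:
    """Return tons of heat rejection for a given evaporated volume per day."""
    btu_per_day = gallons_per_day * LB_PER_GALLON * LATENT_HEAT_BTU_PER_LB
    return btu_per_day / 24 / BTU_PER_HR_PER_TON


mesa_tons = gallons_per_day_to_tons(4_000_000)
print(f"{mesa_tons:,.0f} tons")
```

Under these assumptions, the result comes out near 116,000 tons. A lower latent heat or an allowance for blowdown would lower the tonnage.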

There may be plans to ban water use for data center cooling, but this may be shortsighted. A ban decreases the cooling system’s efficiency, so the data center needs more electricity. Where does the electricity come from? Maybe water-cooled power plants, and if not, the plants are less efficient, consuming vastly more fuel and likely producing more carbon emissions. I see a graduate thesis in the making here: a perfect subject.

Efficiency Programs and Load Management

Dozens of gigawatts of data center loads are being added to the grid each year, while some data center programs, and especially program evaluators, look at the market and say either of two absurdly preposterous things:

- Data center developers, investors, designers, builders, and owners do what they will do anyway, so you have no incentive.

- Data centers consume massive amounts of power (demand) and energy, so they don’t need incentives for efficiency.

Well, duh! Behold the wide range and efficiencies of these systems described above and under construction today.

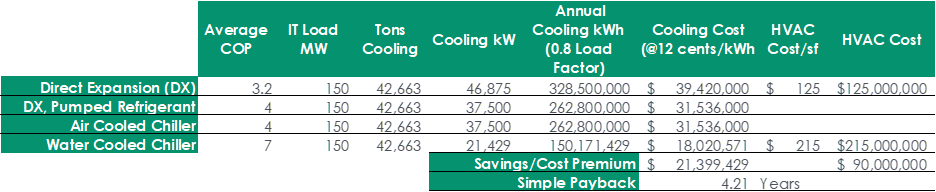

Consider a million-square-foot data center with an estimated 150 MW electric load. I made reasonable assumptions of annual weighted efficiencies of the above-described systems and used cost estimates from Dgtl Infra Real Estate 2.0 to generate the following analysis.

Table 1 Cooling System ROI Comparison

We have a four-year payback on systems that last 20 years for air-side equipment and 25 years for chillers and controls. Additionally, data centers like to tout their sustainability, which may blow these economics out of the water.


Are you, Mx. Commissioner, going to allow the most significant opportunity in decades to reduce cost for ratepayers to slip away, or will you leave it to program evaluators who assign an absurd 6% attribution to data center projects? Why not 4%, or 8%? No. It is precisely 6%.

We must do something about this! I just demonstrated a reasonable estimate of 20 MW savings for one data center! A Virginia-class submarine produces 30 MW of propulsion. Uncanny.

Figure 3 USS Virginia, SSN 774

[1] 2.5 tons peak load: https://web.ornl.gov/sci/buildings/conf-archive/2004%20B9%20papers/129_Wilcox.pdf


[2] Rule of thumb of 400 sf per ton, verified: https://preferredclimatesolutions.com/how-many-tons-of-cooling-per-square-foot-do-you-need-in-a-commercial-building/, https://www.contractingbusiness.com/commercial-hvac/article/21133202/commercial-service-clinic-load-calculation-rules-of-thumb
