Data centers and data rooms consume about 2% of all electricity used in the US, and their consumption is likely to keep growing rapidly. While cooling, uninterruptible power supplies, and other equipment contribute to this high electrical demand, the servers themselves are the largest consumers of power. One of the easiest and most cost-effective ways to reduce wasted server energy is virtualization.

Server virtualization software allows the workloads of multiple physical servers to be combined onto a single machine. The virtualization layer keeps each workload in its own virtual machine, so each one's software runs independently, while the host's processors, memory, and power supply are shared among them.

A simple analogy for how server virtualization works is a typical home computer. Imagine someone whose computing needs are limited to email, social media, music, and an occasional game. They wouldn't buy a separate computer to listen to music while they send a few emails, would they? Of course not. Yet that is essentially what non-virtualized servers do: each application gets its own machine. Virtualization allows one server to handle multiple tasks, which makes sense because computers are very powerful today!

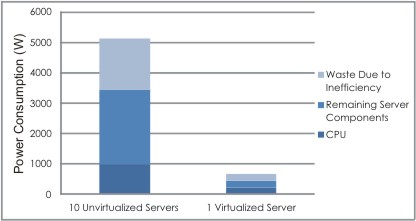

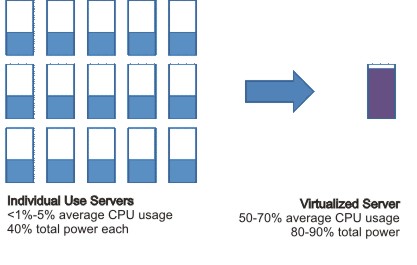

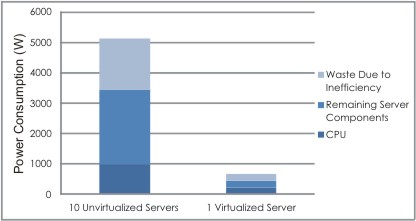

Depending on the function a server provides, most modern single-application servers (like a dedicated email server) are vastly underutilized. These non-virtualized servers sit nearly idle for most of the day, using less than 5% of their processing capacity while still drawing 40-50% (or more) of their full-load power!
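To see why a nearly idle server still draws so much, here is a minimal sketch assuming a simple linear power model: a fixed idle draw plus a dynamic share that grows in proportion to CPU utilization. The 400 W full-load rating and the 45% idle fraction are hypothetical values picked from the 40-50% range above, not measurements of any real server.

```python
# Hypothetical server parameters for illustration only (not measured data).
FULL_LOAD_WATTS = 400.0  # assumed draw at 100% CPU utilization
IDLE_FRACTION = 0.45     # assumed idle draw as a fraction of full load (text cites 40-50%)


def server_power(utilization: float,
                 full_load_watts: float = FULL_LOAD_WATTS,
                 idle_fraction: float = IDLE_FRACTION) -> float:
    """Estimate power draw in watts with a linear model:
    idle power plus a share of the dynamic range proportional to CPU utilization."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    idle_watts = idle_fraction * full_load_watts
    return idle_watts + (full_load_watts - idle_watts) * utilization


if __name__ == "__main__":
    for u in (0.05, 0.5, 1.0):
        watts = server_power(u)
        share = watts / FULL_LOAD_WATTS
        print(f"{u:>4.0%} CPU -> {watts:6.1f} W ({share:.0%} of full load)")
```

Under these assumptions, a server doing 5% of its possible work still draws roughly half of its full-load power, because the idle draw dominates at low utilization. Real servers are not perfectly linear, so treat this as a rough model.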

The power supply chain adds another layer of inefficiency. Because data centers cannot tolerate even a momentary interruption when the grid fails, all power flows through batteries that instantly pick up the load during an outage. Traditionally, this is done by converting the grid's alternating current (AC) to direct current (DC) to charge the batteries in an uninterruptible power supply (UPS). From the batteries, power is converted back to AC for distribution in the data center, and finally, just as in every computer, each server's power supply converts the AC to low-voltage DC. Each conversion along the way loses 5-10% of the energy, and the losses compound. All of that wasted electricity ends up as heat that must be removed from the building, which requires still more energy.
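Because the conversions happen in series, their efficiencies multiply rather than add. The sketch below assumes the same loss at every stage and lists only the conversions named in the paragraph; the 10 kW server load is a hypothetical figure, and the function accepts any number of stages if a real power path has more.

```python
from math import prod

# Conversion stages named in the text; the per-stage loss is an assumed value
# within the 5-10% range quoted above.
STAGES = [
    "grid AC -> DC (UPS rectifier, charges batteries)",
    "DC -> AC (UPS inverter, feeds distribution)",
    "AC -> low-voltage DC (server power supply)",
]


def chain_efficiency(stage_losses):
    """Overall efficiency of conversions in series: product of (1 - loss) per stage."""
    return prod(1.0 - loss for loss in stage_losses)


def grid_power_needed(it_load_watts, stage_losses):
    """Grid power required to deliver it_load_watts at the end of the chain."""
    return it_load_watts / chain_efficiency(stage_losses)


if __name__ == "__main__":
    it_load = 10_000.0  # hypothetical 10 kW of server load
    for loss in (0.05, 0.10):
        losses = [loss] * len(STAGES)
        grid = grid_power_needed(it_load, losses)
        print(f"{loss:.0%} loss per stage: efficiency {chain_efficiency(losses):.1%}, "
              f"grid draw {grid:,.0f} W, heat from conversions {grid - it_load:,.0f} W")
```

The difference between the grid draw and the server load is the heat the cooling system must then remove, which is why every watt saved at the server also saves energy upstream.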

Just to be clear, that’s wasted cooling energy that’s caused by wasted distribution energy that’s caused by wasted server energy. Is that OK? Luckily, most people are not OK with it, which is why so many servers these days are being virtualized.

Depending on the requirements of the server applications, virtualization may consolidate 15 or more servers onto a single server. For example, the two setups in the chart provide the same services for a data closet. To achieve savings of this magnitude, virtualization targets an average CPU utilization of 50% or higher instead of less than 5%. So, rather than running 10-20 servers that each draw around 40% of their full-load power, a virtualized setup runs just one server closer to full power. Once the conversion and cooling losses that scale with server power are included, the choice becomes a no-brainer.
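The consolidation arithmetic can be sketched with the same kind of linear power model. This is a rough illustration under stated assumptions: the hypothetical hosts are identical to the original servers, workloads add up linearly, and hypervisor overhead is ignored. The 400 W rating, 45% idle fraction, and 80% utilization target are illustrative values, not data from the chart.

```python
import math

# Hypothetical hardware parameters (illustrative assumptions, not measurements).
FULL_LOAD_WATTS = 400.0
IDLE_FRACTION = 0.45


def server_power(utilization):
    """Linear power model: idle draw plus dynamic power proportional to CPU load."""
    idle = IDLE_FRACTION * FULL_LOAD_WATTS
    return idle + (FULL_LOAD_WATTS - idle) * utilization


def consolidate(num_servers, avg_utilization, target_utilization=0.8):
    """Return (watts_before, watts_after, hosts_after) when moving num_servers
    lightly loaded machines onto identical hosts run at up to target_utilization.
    Assumes workloads add linearly and ignores hypervisor overhead."""
    before = num_servers * server_power(avg_utilization)
    total_work = num_servers * avg_utilization  # in units of one server's full capacity
    hosts = max(1, math.ceil(total_work / target_utilization))
    after = hosts * server_power(total_work / hosts)  # load spread evenly across hosts
    return before, after, hosts


if __name__ == "__main__":
    before, after, hosts = consolidate(num_servers=15, avg_utilization=0.05)
    print(f"before: 15 servers, {before:,.0f} W")
    print(f"after:  {hosts} host(s), {after:,.0f} W")
    print(f"server power saved: {1 - after / before:.0%}")
```

With these numbers, fifteen servers at 5% utilization collapse onto a single host running at 75%, and the server-level draw drops by well over 80% before any savings in conversion losses or cooling are counted.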