An important part of Demand Side Management (DSM) program evaluation is determining gross program impacts[1]. Gross impacts are generally evaluated using project file desk reviews, on-site measurement and verification, or a combination of the two. A question that often arises during evaluation planning is, “How many of each type should be completed?” Put another way: do on-site impact evaluation activities produce results that differ meaningfully from those of a file review?

Over the years, Michaels has completed hundreds of project evaluations using these two methods. The first is a desk review of project documentation to verify that the equipment quantities and types are consistent with what was outlined in the program documents. The desk review can be supplemented by a phone interview with the customer or vendors to fill in details missing from the file. The second is fieldwork: traveling to the customer’s facility, meeting with the customer in person, visually inspecting the equipment, and often installing data loggers to monitor key parameters over time. On-site evaluation is more time-consuming, more complex, and more expensive, and its result often differs significantly from the estimate based on a file review alone.

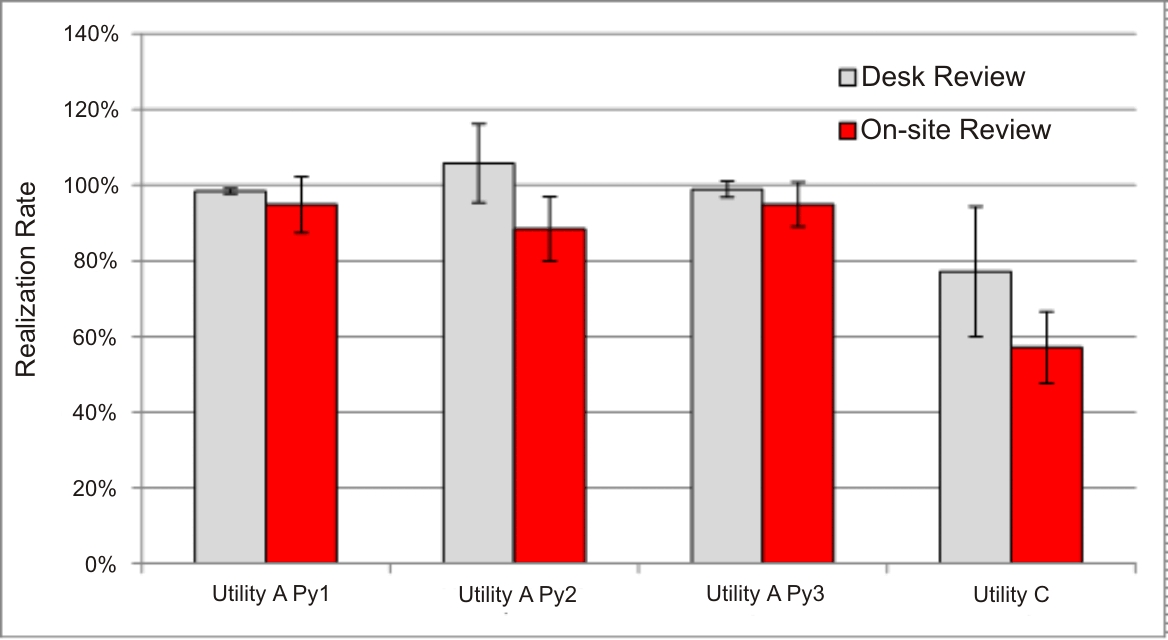

To compare the two approaches, Michaels’ engineers looked back through past evaluations. For several program waves of verification, realization rates[2] were calculated twice: once after the desk review stage and again after the on-site data were collected and analyzed. The results are shown in the chart.
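As a minimal sketch, assuming the usual definition of a realization rate (evaluated, or ex-post, gross savings divided by reported, or ex-ante, savings), a program-level rate for a group of projects can be computed as a ratio of totals. The `Project` record and `realization_rate` function are hypothetical names for illustration, the savings figures are made up, and the unweighted sums ignore the sampling weights a real stratified evaluation would apply.

```python
from dataclasses import dataclass


@dataclass
class Project:
    # Hypothetical record: reported (ex-ante) savings from program tracking data
    # and evaluated (ex-post) savings after a desk review or site visit, in kWh/yr.
    reported_kwh: float
    evaluated_kwh: float


def realization_rate(projects: list[Project]) -> float:
    """Ratio-of-totals realization rate: total evaluated / total reported savings.

    Assumption: every project counts equally (no stratification weights).
    """
    total_reported = sum(p.reported_kwh for p in projects)
    if total_reported <= 0:
        raise ValueError("total reported savings must be positive")
    return sum(p.evaluated_kwh for p in projects) / total_reported


# Made-up example: the same two projects evaluated two different ways.
desk_review = [Project(100_000, 96_000), Project(50_000, 48_000)]
on_site = [Project(100_000, 78_000), Project(50_000, 42_000)]
print(round(realization_rate(desk_review), 2))  # 0.96
print(round(realization_rate(on_site), 2))      # 0.8
```

Computing the rate from totals rather than averaging per-project ratios keeps large projects from being outweighed by small ones, which matters when a few big projects dominate program savings.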

The key thing to notice is that the red bars in the chart (after site visits) always fall outside the error bars on the gray bars (after desk review). In other words, the results found after completing the site visits, which are the more representative results, fell outside the range defined by the desk review estimates’ precision and confidence level.
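The comparison in the chart can be sketched in code, assuming the desk review error bars come from a standard ratio-estimator variance with a normal approximation. This sketch ignores the finite population correction and stratification, both of which a real evaluation may apply. The function names, the sample data, and the on-site rate of 0.85 are all hypothetical; the point is the final check of whether the on-site realization rate lands outside the desk review interval, as the red bars do.

```python
import math
from statistics import NormalDist


def ratio_estimate_ci(reported, evaluated, confidence=0.90):
    """Realization rate and a normal-approximation confidence interval.

    Uses the textbook ratio-estimator variance:
        var(r) ~= sum((y_i - r * x_i)^2) / ((n - 1) * n * x_bar^2)
    Assumptions: simple random sample, no finite population correction.
    """
    n = len(reported)
    if n < 2 or n != len(evaluated):
        raise ValueError("need at least two paired observations")
    rr = sum(evaluated) / sum(reported)
    x_bar = sum(reported) / n
    # Residuals of each project's evaluated savings around the ratio line.
    residuals = [y - rr * x for x, y in zip(reported, evaluated)]
    s2 = sum(e * e for e in residuals) / (n - 1)
    se = math.sqrt(s2 / n) / x_bar
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.645 at 90%
    half_width = z * se
    return rr, rr - half_width, rr + half_width


def outside_interval(value, low, high):
    """True when a value falls outside the closed interval [low, high]."""
    return value < low or value > high


# Hypothetical desk review sample (MWh/yr): reported vs. desk-review savings.
reported = [100, 200, 150, 50]
desk = [98, 190, 148, 49]
rr, low, high = ratio_estimate_ci(reported, desk)

on_site_rr = 0.85  # hypothetical realization rate after site visits
print(outside_interval(on_site_rr, low, high))  # True
```

A desk review sample can produce a tight interval simply because the file data agree with themselves; the interval says nothing about errors that only a site visit would reveal, which is why the on-site result can land well outside it.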

For a real-life analogy, let’s play darts. You take your first throw blindfolded (i.e., without all of the available information), hear the dart hit, and think you did a great job. Once you remove the blindfold (i.e., gather more information on site), you realize that your throw was so far off the mark that you missed the plywood backing behind your dart board and put a nice hole in the wall.

Bottom line: gathering more information produces a different and more accurate result. If the program is large enough, consider including some on-site measurement and verification in the evaluation.
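How large is “large enough” ties back to the planning question of how many site visits to complete. One common planning approach, sketched here under stated assumptions, sizes a simple random sample from an assumed coefficient of variation (CV) and a relative precision target, then applies a finite population correction so that small programs need fewer visits. The CV of 0.5 and the 90% confidence / 10% precision target are conventional planning assumptions, not figures from these evaluations, and `sample_size` is a hypothetical helper.

```python
import math
from statistics import NormalDist


def sample_size(population, cv=0.5, rel_precision=0.10, confidence=0.90):
    """Projects to sample for a target relative precision at a confidence level.

    n0 = (z * cv / rel_precision)^2, then the finite population correction
    n = n0 / (1 + n0 / N). Assumes simple random sampling and an a-priori CV.
    """
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    n0 = (z * cv / rel_precision) ** 2
    n = n0 / (1 + n0 / population)
    return math.ceil(n)


for projects in (50, 200, 1000):
    print(f"{projects} projects -> {sample_size(projects)} site visits")
# 50 projects -> 29 site visits
# 200 projects -> 51 site visits
# 1000 projects -> 64 site visits
```

The required sample levels off near 68 as the program grows, so the site-visit share shrinks for larger programs, which is one reason on-site verification becomes more affordable as program size increases.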